Willem Ligtenberg – GPU computing and R

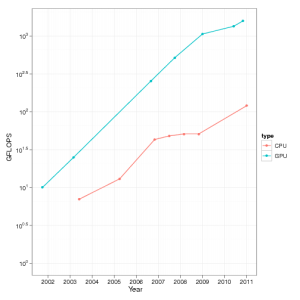

Why GPU computing? The theoretical peak GFLOPS of a GPU is three times greater than that of a CPU. GPUs suit single instruction, multiple data (SIMD) problems, where the same operation is applied to many data elements. GPUs were initially developed for texture problems: for example, a wall smashed into lots of pieces, with each core handling a single piece. CUDA and FireStream are brand specific (NVIDIA and AMD respectively), whereas OpenCL is an open standard for GPUs. In principle(!), you write code once and it works on multiple platforms.
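To make the SIMD idea concrete, here is a minimal sketch in plain R. No GPU is involved and the variable names are purely illustrative. The same operation is applied to every element independently, which is the property that lets a GPU hand each element to a separate core.

```r
# Illustrative data: eight independent elements.
x <- as.numeric(1:8)

# Element-by-element loop: one instruction applied to one datum at a time.
loop_result <- numeric(length(x))
for (i in seq_along(x)) {
  loop_result[i] <- 2 * x[i] + 1
}

# Vectorised form: the same instruction expressed over all elements at once.
vector_result <- 2 * x + 1

# Both forms compute the same values; no element depends on any other.
stopifnot(identical(loop_result, vector_result))
```

Problems where each result depends on its neighbours do not fit this pattern as neatly.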

Current R-packages:

- gputools: GPU implementation of standard statistical tools. CUDA only

- rgpu: Dead.

- cudaBayesreg: linear model from a Bayes package.

ROpenCL is an R package that provides an R interface to the OpenCL library. Think of it as Rcpp for OpenCL. ROpenCL manages the memory for you (yeah!). A little over a week ago, Simon Urbanek published the OpenCL package on CRAN. The OpenCL package is a really thin layer around OpenCL. ROpenCL should work out of the box on Ubuntu; not sure about Windows.

Pragnesh Patel – Deploying and benchmarking R on a large shared memory system

Single system image – Nautilus: 1024 cores, 4TB of global shared memory, 8 NVIDIA Tesla GPUs.

- Shared Memory: symmetric multiprocessor, uniform memory access, does not scale.

- Physical distributed memory: multicomputer or cluster.

- Distributed shared memory: non-uniform memory access (NUMA).

We need to measure the parallel overhead of parallel programs. Implicit parallelism (BLAS, pnmath): the programmer does not worry about task division or process communication, which improves programmer productivity.

pnmath (Luke Tierney): this package implements parallel versions of most of the non-RNG routines in the math library. It uses OpenMP directives and requires only trivial changes to serial code. Results summary: pnmath is only worthwhile for some functions. For example, dnorm is slower in parallel. Weak scaling: algorithms that have heavy communication do not scale well.
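One way to check whether parallelising a cheap function such as dnorm pays off is to time it both ways. The sketch below is not pnmath, which uses OpenMP inside the math routines. It swaps in fork-based `mclapply` from the base `parallel` package as a stand-in, and the vector length and worker count are arbitrary assumptions. Timings depend entirely on the machine, so none are shown.

```r
library(parallel)

# Arbitrary problem size and worker count, chosen only for illustration.
n <- 1e6
# Forking is unavailable on Windows, so fall back to a single worker there.
workers <- if (.Platform$OS.type == "windows") 1L else 2L

x <- seq(-4, 4, length.out = n)

# Serial baseline.
t_serial <- system.time(serial <- dnorm(x))[["elapsed"]]

# Parallel version: split the indices into contiguous chunks, one per worker.
chunks <- splitIndices(n, workers)
t_parallel <- system.time(
  pieces <- mclapply(chunks, function(idx) dnorm(x[idx]), mc.cores = workers)
)[["elapsed"]]
par_result <- unlist(pieces)

# The answers must agree before the timings mean anything.
stopifnot(isTRUE(all.equal(serial, par_result)))

# Speedup > 1 means the parallel version won; efficiency is speedup per worker.
speedup <- t_serial / t_parallel
efficiency <- speedup / workers
cat("serial:", t_serial, "s  parallel:", t_parallel, "s  speedup:", speedup, "\n")
```

For a function as cheap per element as dnorm, the cost of starting workers and copying results back can easily exceed the work itself, which fits the observation that it was slower in parallel.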

Lessons: data placement is important, performance depends on system architecture, operating system and compiler optimizations.

Reference: Implicit and Explicit Parallel computing in R by Luke Tierney.

K Doi – The CUtil package which enables GPU computation in R

Windows only

Features of CUtil:

- Easy to use for Windows users

- Overriding common operators

- Small data transfer cost

- Support for double precision
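One plausible reading of the first two features is that they go together: if a package overrides operators for its own class, existing expressions such as `a + b` keep working while the data can stay on the device between operations. The sketch below shows only the R-side mechanism (S3 dispatch) and is not CUtil's actual code. The names `gpu_vector` and `to_host` and the list-based "device" storage are hypothetical stand-ins.

```r
# Hypothetical wrapper class. In a real GPU package the payload would be
# a handle to device memory rather than an ordinary R vector.
gpu_vector <- function(x) {
  structure(list(data = as.numeric(x)), class = "gpu_vector")
}

# S3 method for binary "+": R dispatches here when either operand
# has class "gpu_vector".
"+.gpu_vector" <- function(e1, e2) {
  a <- if (inherits(e1, "gpu_vector")) e1$data else e1
  b <- if (inherits(e2, "gpu_vector")) e2$data else e2
  # Stand-in for launching a device kernel; the result stays wrapped,
  # so chained operations would not copy data back to the host.
  gpu_vector(a + b)
}

# Explicit transfer back to an ordinary R vector (hypothetical helper).
to_host <- function(x) x$data

a <- gpu_vector(1:3)
b <- gpu_vector(4:6)
total <- a + b + 1   # ordinary R syntax, dispatched to the method above
to_host(total)
```

Keeping the result wrapped until `to_host` is called is what would keep transfers small: only the final answer crosses back to host memory.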

Works under Windows. A Linux version is planned – not sure when. Developed under R 2.12.x and 2.13.x.

Implemented functions: configuration function, standard matrix algebra routines, memory transfer functions. Some RNGs are also available, e.g. Normal, LogNormal.

“Tiny benchmark” example: the GPU version seems a lot faster, around 25 times. However, the example only uses a single CPU as its test case.

M Quesada – Bayesian statistics and high performance computing with R

A desktop application, OBANSoft, has been developed. It has a modular design. Amongst other things, this allows integration with OpenMP, MPI, CUDA, etc.

- Statistical library: Java + R.

- Desktop: Java Swing.

- Parallelization: Parallel R

Uses a “Model-View-Controller” setup.

Please note that the notes/talks section of this post is merely my notes on the presentation. I may have made mistakes: these notes are not guaranteed to be correct. Unless explicitly stated, they represent neither my opinions nor the opinions of my employers. Any errors you can assume to be mine and not the speaker’s. I’m happy to correct any errors you may spot – just let me know! The above paragraph was stolen from Allyson Lister who makes excellent notes when she attends conferences.

For speed comparisons, do the authors compare a GPU with a multi-core CPU? In many papers, the comparison is with a single-core CPU. If a programmer can use
