Ratio-of-uniforms

This post is based on chapter 1.4.3 of Advanced Markov Chain Monte Carlo. Previous posts on this book can be found via the AMCMC tag.

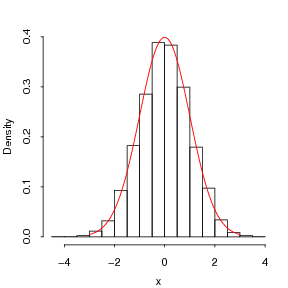

The ratio-of-uniforms was initially developed by Kinderman and Monahan (1977) and can be used for generating random numbers from many standard distributions. Essentially we transform the random variable of interest, then use a rejection method.

The algorithm is as follows:

Repeat until a value is obtained from step 2.

- Generate

uniformly over

.

- If

. return

as the desired deviate.

The uniform region is

In AMCMC they give some R code for generate random numbers from the Gamma distribution.

I was going to include some R code with this post, but I found this set of questions and solutions that cover most things. Another useful page is this online book.

Thoughts on the Chapter 1

The first chapter is fairly standard. It briefly describes some results that should be background knowledge. However, I did spot a few a typos in this chapter. In particular when describing the acceptance-rejection method, the authors alternate between and

.

Another downside is that the R code for the ratio of uniforms is presented in an optimised version. For example, the authors use EXP1 = exp(1) as a global constant. I think for illustration purposes a simplified, more illustrative example would have been better.

This book review has been going with glacial speed. Therefore in future, rather than going through section by section, I will just give an overview of the chapter.